Success Story Regiocom: GDPR-compliant Archive Automation with BatchMan

The EU General Data Protection Regulation (GDPR) has applied since May 25, 2018. Since then, every company that collects and stores personal data has had to adapt its entire data management accordingly. Non-compliance can result in penalties of up to 20 million euros or 4% of worldwide annual turnover, whichever is higher. In 2019, for example, the British data protection authority announced its intention to fine a hotel chain around 110 million euros because customer data had been disclosed. In many companies, especially in IT, this legal change has created major technical challenges in handling personal data: for example, how long the data may be stored and archived, and after what period it must be deleted. This creates a need for new deletion and archiving services that enforce retention periods through GDPR-compliant deletion processes.

Such an archiving service was also to be automated at regiocom, the full-service provider for the energy industry. regiocom SE has been a partner of the energy industry since 1996 and, as the regiocom Group, is the largest group-independent provider of process services in the German-speaking region. Deletion and archiving processes in accordance with the GDPR have to be carried out there on a regular basis. In addition, the archive stock of the last 10 years had to be reprocessed.

By automating and checking the archive processes at regiocom, BatchMan prevents gaps in the process and ensures that compliance requirements are met. Processes can be monitored centrally and easily, and processing errors become visible immediately. Control loops are used to achieve optimal utilization of the archive while preventing overload.

Industry:

Utilities, Telecommunications

Use Case:

Deletion and Archiving service

Advantages:

At peak times, BatchMan eliminates up to 90% of buffer times and substantially relieves the service team.

The initial situation: prescribed deletion periods for the SAP archives

regiocom has been practicing the “almost paperless” office for a long time: correspondence with clients and customers served by regiocom’s service center is archived exclusively electronically. This applies to both incoming and outgoing mail as well as notes on telephone calls, changes to personal data, meter readings or contract contents received via web portals.

All communication is loaded into a document archive via SAP and via external systems. All documents are linked as contacts to the corresponding customer masters.

With the introduction of the GDPR in 2018, a new requirement arose: the mandatory deletion periods for personal data in the archives had to be enforced, both for newly archived requests and for those that had already been archived in the past. This meant ‘cleaning’ the archived data of the last 10 years.

The Challenge in SAP

- One archive, but many clients from multiple SAP systems accessing this archive, plus external systems.

- Writing or deleting all clients' bulk data (batch) at the same time without overloading the archive.

With standard job processing in SAP, the only way to avoid this overload was to schedule the jobs in advance with time buffers between them. The problem was that, because of very large fluctuations, the data volume at any given time could not be predicted. A long interval between the individual jobs was safe for the archive, but it carried the risk of not getting all the data through in one cycle and of leaving very large gaps (idle time) between the jobs. A shorter interval carried the risk of overloading the archive.

BatchMan as a solution for seamless scheduling of archive batches

At peak times, regiocom generates 200,000 contacts per week in one client, about half of which have attachments that are stored in the archive. To avoid overloading the system, work packages of 5,000 inquiries are created, which are then transferred sequentially from SAP to the archive system. This can result in as many as 40 work packages at peak times. With a purely time-controlled solution in SAP-SM37, this would mean buffer times of up to 160 hours or require permanent monitoring by an employee. Given the time and resource constraints, this model was not viable, so regiocom looked for a more efficient approach to planning.
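The figures above can be checked with a few lines of arithmetic. This is only a sketch of the calculation: the contact volume and package size come from the text, while the buffer of 4 hours per work package is an assumption inferred from 160 hours spread over 40 packages.

```python
import math

contacts_per_week = 200_000    # peak weekly contacts in one client (from the text)
package_size = 5_000           # inquiries per work package (from the text)
buffer_hours_per_package = 4   # assumption: inferred from 160 h / 40 packages

# Number of work packages needed to move one peak week into the archive.
packages = math.ceil(contacts_per_week / package_size)

# Total buffer time if every package is scheduled with a fixed time buffer.
total_buffer_hours = packages * buffer_hours_per_package

print(f"{packages} work packages, {total_buffer_hours} hours of buffer time")
```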

In this case, the “job nets” in BatchMan were the obvious choice. regiocom had already decided in favor of job scheduling with BatchMan, since time buffers and manual handling as in SAP-SM37 are no longer necessary. In the case of the archive batches, it was thus possible to schedule the jobs one after the other without gaps, without wasting time or overloading the archive.
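The difference between the two scheduling styles can be illustrated with a small simulation. This is a simplified model, not BatchMan's or SAP's actual scheduling logic: the function names and the job durations are hypothetical, and a job that starts while a predecessor is still running is simply counted as an overlap (the overload risk described above).

```python
from dataclasses import dataclass


@dataclass
class ScheduleResult:
    makespan: int   # minutes until the last job has finished
    idle: int       # minutes in which no job was running
    overlaps: int   # jobs that started while an earlier job was still running


def time_controlled(durations, slot):
    """Start job i at minute i * slot, regardless of how long its predecessor runs."""
    idle = 0
    overlaps = 0
    busy_until = 0
    for i, duration in enumerate(durations):
        start = i * slot
        if start < busy_until:
            overlaps += 1               # predecessor still running: overload risk
        else:
            idle += start - busy_until  # archive sits unused until the slot begins
        busy_until = max(busy_until, start + duration)
    return ScheduleResult(busy_until, idle, overlaps)


def chained(durations):
    """Start each job as soon as its predecessor has finished (job-net style)."""
    return ScheduleResult(sum(durations), 0, 0)


# Hypothetical run times in minutes; real volumes fluctuate strongly.
durations = [10, 45, 20, 240, 15]

print(time_controlled(durations, slot=240))  # long buffer: safe, but mostly idle
print(time_controlled(durations, slot=30))   # short buffer: fast, but jobs overlap
print(chained(durations))                    # no gaps and no overlaps
```

In this model, the long buffer avoids overlaps at the cost of idle time, the short buffer does the opposite, and only the chained variant avoids both.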

The use of BatchMan enables potential savings of up to 230 minutes (approx. 4 hours) per work package, as the previously scheduled time buffers are no longer required. Automation not only speeds up the processes considerably, but also eliminates the need for personnel to be on standby to intervene in the event of a processing error.

Cleanly updating the delimited periods was also a particular challenge in SAP. regiocom was able to implement this in BatchMan via the selection variables (TVARV).

In this way, regiocom also succeeded in solving the second important problem: reducing the “backlog” during archiving/deletion.

Reducing the Archive Backlog

A backlog of 10 years had to be reduced in a short period of time. One option would be to assign an employee who starts the jobs, waits until they are finished, adjusts the parameters and then starts the next run. But this would tie up too many resources. That is where automation comes in: it reduces the workload of the employees and shortens the processes without introducing errors.

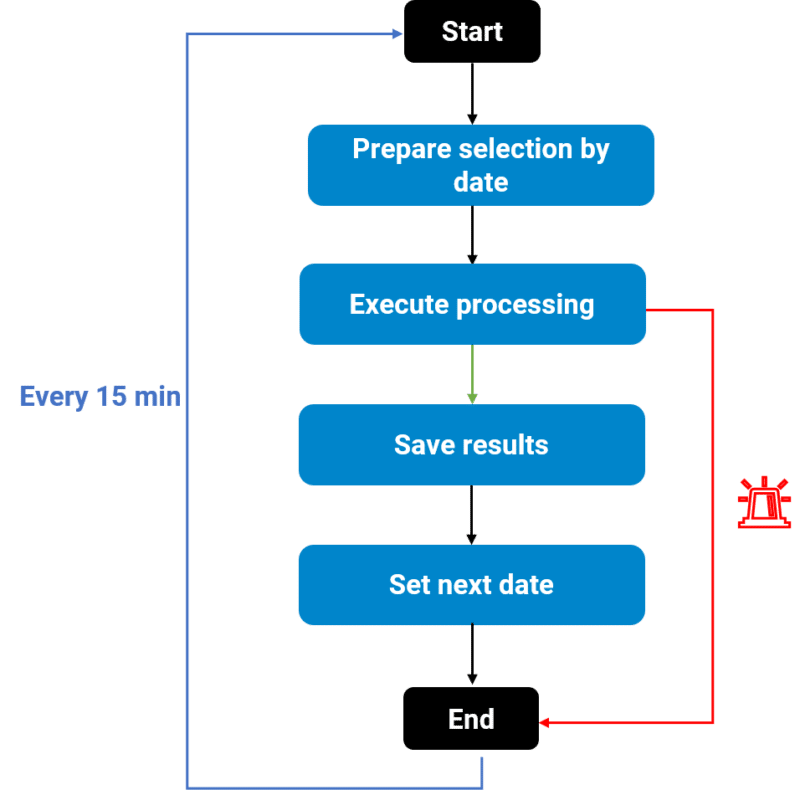

Since very large amounts of data had to be processed in a short period of time without overloading the system, the SAP on-board resources, i.e. a purely time-controlled solution, reached their limits in this case as well. Therefore, regiocom created the following job network structure in BatchMan:

The actual checking and building of links to ILM (reprocessing of legacy data) was limited to a data interval of 24 hours per run. A larger interval was not possible, as the archive would otherwise have shut down due to overload (too many requests in too short a time).

The other jobs served only to shift the interval if processing had completed successfully. This network was started as a “time periodic repeat job” with an interval of 15 minutes between 8 a.m. and 8 p.m. No runs were started at night, because other batch jobs were running against the archive.

The job was scheduled for a fixed period (several days), as there was no termination condition. Thus, during the day, one day of data was processed every 15 minutes. To avoid parallel runs, the attribute “parallel” was set to “no”. This served as a functional pattern for the “reduction of backlogs”.
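The pattern described above can be sketched as a simple loop. This is a simulation in plain Python, not BatchMan configuration: `run_backlog`, `process_day` and the dates are hypothetical, the 8 p.m. boundary is assumed to be exclusive, and each run is assumed to finish within the 15-minute interval, so the "parallel = no" rule never has to skip a start.

```python
from datetime import date, datetime, time, timedelta


def in_window(now, start=time(8, 0), end=time(20, 0)):
    """Runs are only started during the day (assumption: 8 p.m. is exclusive)."""
    return start <= now.time() < end


def run_backlog(cursor, stop, clock_start, clock_end, process_day,
                tick=timedelta(minutes=15)):
    """Process one day of legacy data per tick and shift the interval only on success.

    cursor      -- first day of legacy data that has not been processed yet
    stop        -- day at which the backlog is considered cleared
    process_day -- hypothetical callback; returns True if the day was processed OK
    """
    now = clock_start
    while now < clock_end and cursor < stop:
        # Runs are strictly sequential here, so no two runs ever overlap.
        if in_window(now) and process_day(cursor):
            cursor += timedelta(days=1)   # shift the interval to the next day
        now += tick
    return cursor


# One simulated calendar day with a callback that always succeeds.
new_cursor = run_backlog(
    cursor=date(2010, 1, 1),
    stop=date(2020, 1, 1),
    clock_start=datetime(2024, 1, 1, 0, 0),
    clock_end=datetime(2024, 1, 2, 0, 0),
    process_day=lambda day: True,
)
print(new_cursor)
```

If a run fails, the interval is not shifted, so the same day is retried on the next tick until the error has been resolved.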

These techniques meant that both SAP Basis and the specialist support staff had to deal with this problem only sporadically, i.e. only when an error was reported by e-mail or for regular control purposes.

At regiocom, the volume involved in reducing the backlog in the archive amounted to several billion data records for a wide variety of interdependent objects.

In addition to the actual “archiving objects”, regiocom thus also controls IDoc archiving/deletion, since deletion largely amounts to “pseudo-archiving”: the data is archived into a “dummy archive” so that all links can be deleted according to the rules. An archive file is written to the local disk system and, after deletion in SAP, is deleted there as well.

The use of BatchMan at regiocom now ensures that the archive is loaded at the optimal rate, achieving the maximum possible throughput without overloading the system. The GDPR requirements for the deletion and archiving services could thus be fully met, and the archive backlog of the last 10 years could be gradually reduced.